Why Your AI Costs Are So High: Understanding Tokens and Context Windows

Written By

Vishal Soni

Dec 2, 2025

11 Min Read

Master the economics of LLMs by understanding tokens and context windows. Learn why quadratic scaling makes context stuffing expensive and slow, and how to treat your context window as precious real estate for cost-effective AI deployment.

TL;DR for Executives: LLMs charge you for both input and output tokens (not words), and processing costs scale quadratically. Doubling your prompt length quadruples computational cost. Understanding tokens and context windows is critical for controlling AI costs and latency. The "context stuffing" strategy (dumping entire documents into prompts) is the fastest way to make your AI application unusable.

In our previous article on LLM word prediction, we established that an LLM is a prediction engine, not a person. Now, we must turn our attention to the practical realities of paying for that engine and why, in the world of AI, "more" isn't just not better, it's often much worse.

When a developer rushes in, excited to "paste the entire project documentation" into an AI model to get a quick answer, they are falling for a common misconception. They assume the model reads like a human: that reading 100 pages just takes 100 times longer than reading one page.

It doesn't.

In reality, the math of attention is more complex. Doubling the input doesn't double the cost, it quadruples it.

To manage AI in production, you must understand the two fundamental constraints of the architecture: Tokens (the currency) and Context Windows (the budget).

The Unit of Account: It's Not Words, It's Tokens

We tend to measure communication in words. LLMs measure it in tokens.

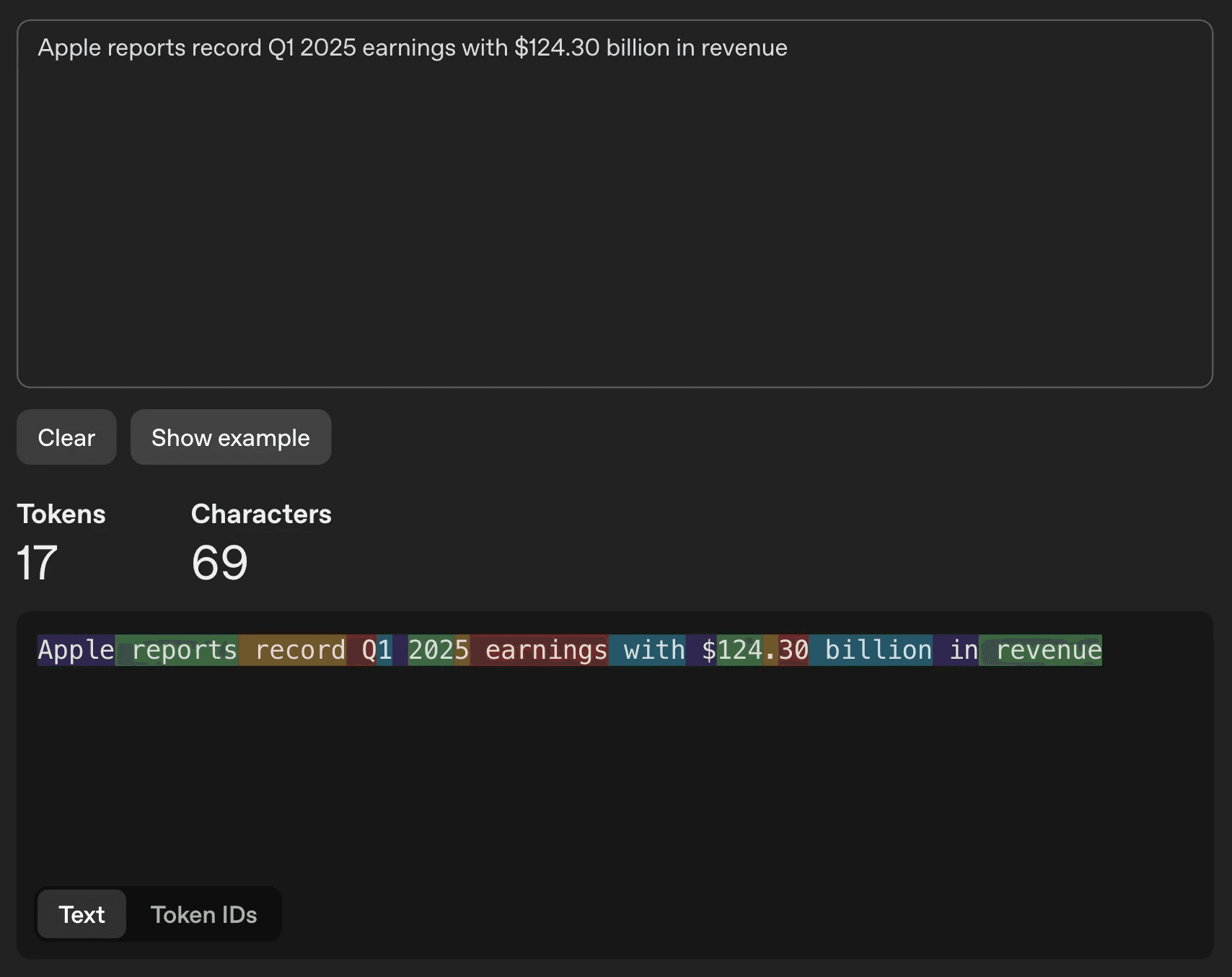

A token is the actual, granular unit of text the model processes [1]. It might be a complete word ("apple"), or often just a fragment or piece of a word ("ing," "the_"). For a rough estimate, you can usually count on 1,000 tokens translating to approximately 750 words of regular English text.

Why does this distinction matter to a business leader? Because you are billed on both input and output tokens.

You can explore token counts in real-time at the OpenAI tokenizer.

Every time you send a chat message to an LLM, you are not just paying for the answer. You are paying for the model to "read" the entire conversation history sent along with it [2]. If you have a chatbot session that has been running for an hour, you are re-sending the start of that conversation with every single new message.

This hidden "re-reading" cost accumulates silently until you receive a bill that makes no sense for the perceived volume of work. Current API pricing varies significantly by model, for instance, GPT-5 mini ranges from $0.25 per 1M input tokens to $2.00 per 1M output tokens, while more capable models cost substantially more [1].

The Workspace: The Context Window

If the LLM is the processor, the Context Window is its RAM (short-term memory).

The context window defines the maximum amount of text the model can consider at one time to make a prediction. This includes your system instructions, the user's question, and any documents you pasted in [2].

In the ancient days (five years ago), context windows were tiny, barely enough for a long email. Today, models boast windows of 128k or even 1 million tokens [1]. This has led to a problematic engineering pattern: the "Context Stuffing Strategy."

The Strategy: Just dump the entire PDF, codebase, or customer history into the prompt.

The Failure: The model technically accepts the input, but the application becomes unusable.

The Quadratic Scaling Challenge

This is the most critical technical concept for a non-technical leader to grasp: Latency does not scale linearly with context length [2].

If you double the length of the prompt, the model does not just take twice as long to reply. The attention mechanism at the heart of transformer models has quadratic complexity, doubling the context length requires approximately four times the computational resources [3][5] .

An LLM uses an attention mechanism where every token attends to every other token to understand relationships [4]. This creates an O(n²) computational pattern:

Processing 1,000 tokens is fast

Processing 100,000 tokens requires 10,000 times more compute operations [5]

Modern optimizations like FlashAttention have improved memory efficiency, reducing memory complexity from quadratic to linear, but they don't eliminate the fundamental computational cost. They make the process more efficient through better hardware utilization while maintaining numerically identical outputs.

We have seen enterprise chatagents where adding a few hundred words to the system prompt resulted in seconds of additional delay, making the agent feel sluggish and broken.

The Strategic Imperative: Treat Context as Precious Real Estate

The system prompt is the set of instructions defining your AI's behavior. Think of your context window as precious real estate with limited space and high carrying costs.

You cannot afford to waste your context window on irrelevant data. If you fill the window with a 50-page HR manual just to answer a question about holiday leave, you are paying for the model to process 49 pages of irrelevant information. You are:

Increasing latency (quadratic computational cost)

Increasing cost (re-reading all tokens on every turn)

Decreasing accuracy (longer contexts with irrelevant information make it harder for models to identify salient details) [6]

This creates a fundamental challenge: We have massive datasets, but a limited, expensive context window that grows increasingly costly as it fills [2].

How do we solve this? We don't teach the model everything. We don't stuff the window. We optimize through strategic retrieval.

Key Takeaways: Managing Tokens & Context Windows

Now that you understand the economics of LLM processing, here's how to apply this knowledge:

Audit Your Token Usage: Use tools like the OpenAI tokenizer to understand exactly what you're paying for. That "simple" chatbot might be re-sending the entire conversation history with every message.

Treat Context as Precious Real Estate: Don't dump entire documents into prompts. Only include the minimum necessary information to answer the query.

Design for Retrieval, Not Stuffing: Instead of pasting 50-page manuals, implement strategic retrieval systems that pull only the relevant sections.

Monitor Latency, Not Just Cost: Quadratic scaling means your app can become unusably slow before it becomes expensive. Set latency budgets (e.g., "responses must be under 2 seconds").

Optimize System Prompts: Every word in your system prompt is re-processed on every turn. Keep instructions concise and essential.

The next frontier in AI cost optimization is Retrieval-Augmented Generation (RAG), the technique that allows you to work with massive knowledge bases while keeping context windows lean, fast, and cost-effective. We'll explore this in an upcoming article.

Frequently Asked Questions (FAQ)

Q: What are tokens in LLMs and why should business leaders care?

A: Tokens are the granular units of text that LLMs process, which can be complete words like "apple" or fragments like "ing" or "the_". Approximately 1,000 tokens translate to 750 words of English text. Business leaders should care because you're billed for both input and output tokens, meaning every time your chatbot re-reads the conversation history, you're paying for it again.

Q: What is quadratic scaling and how does it impact my AI costs?

A: Quadratic scaling (O(n²)) means that when you double the input length, the computational requirements increase by four times, not just two times. This occurs because every token in the attention mechanism must attend to every other token to understand relationships. In practical terms: a 1,000-token prompt is fast, but a 100,000-token prompt requires 10,000 times more compute operations.

Q: Why does "context stuffing" (pasting entire documents) make my AI application slow?

A: Context stuffing creates three problems: (1) Increased latency due to quadratic computational cost, (2) Increased cost from re-reading all tokens on every turn, and (3) Decreased accuracy because longer contexts with irrelevant information make it harder for models to identify salient details. Adding a few hundred words to your system prompt can result in seconds of additional delay.

Q: How do I reduce token costs without sacrificing quality?

A: Implement strategic retrieval instead of context stuffing. Rather than pasting a 50-page HR manual to answer a question about holiday leave, use a retrieval system to pull only the relevant 2-3 paragraphs. This keeps your context window lean, your latency low, and your costs manageable while maintaining accuracy.

Q: What's the difference between context window size and actual usage?

A: Context window size (e.g., 128k tokens) is the maximum the model can handle. Actual usage is what you're currently sending. Just because a model supports 1 million tokens doesn't mean you should use them all. Every token you send increases cost and latency quadratically. Treat context window capacity like a budget: just because you have it doesn't mean you should spend it.

Citations

OpenAI - GPT-5 mini (https://platform.openai.com/docs/models/gpt-5-mini)

Maxim AI - Context Window Management Strategies (https://www.getmaxim.ai/articles/context-window-management-strategies-for-long-context-ai-agents-and-chatbots/)

Adaline Labs - Understanding Attention Mechanisms in LLMs (https://labs.adaline.ai/p/understanding-attention-mechanisms)

DataCamp - Attention Mechanism in LLMs: An Intuitive Explanation (https://www.datacamp.com/blog/attention-mechanism-in-llms-intuition)

arXiv - Efficient Attention Mechanisms for Large Language Models: A Survey (https://arxiv.org/abs/2507.19595)

arXiv - Core Context Aware Transformers for Long Context Modeling (https://arxiv.org/html/2412.12465v2)